Model Evaluation / Selection / Fits / Validation

Train Test Split

- Before running the model, the data is split into

TrainingandTestingsets. - The training to test split ratio is generally 70:30 and can vary.

- The model is trained on the

Trainingdataset hence the name - Once the model is fit on the data, we use the

Testingdataset to check how the model performs.

The model is best served with a k-fold cross validation set where the data is randomly split multiple k times and each time the model fit is checked.

Validation :

K-fold cross Validation

- Sample is partitioned into k equal sized subsamples.

- A single subsample is used as the validation dataset for testing the model.

- Remaining k-1 subsamples are used as training data.

- The cross validation process is repeated k times.

Advantages

All observations are used for both training and validation.

Each of the k subsamples used exactly once as the validation data.

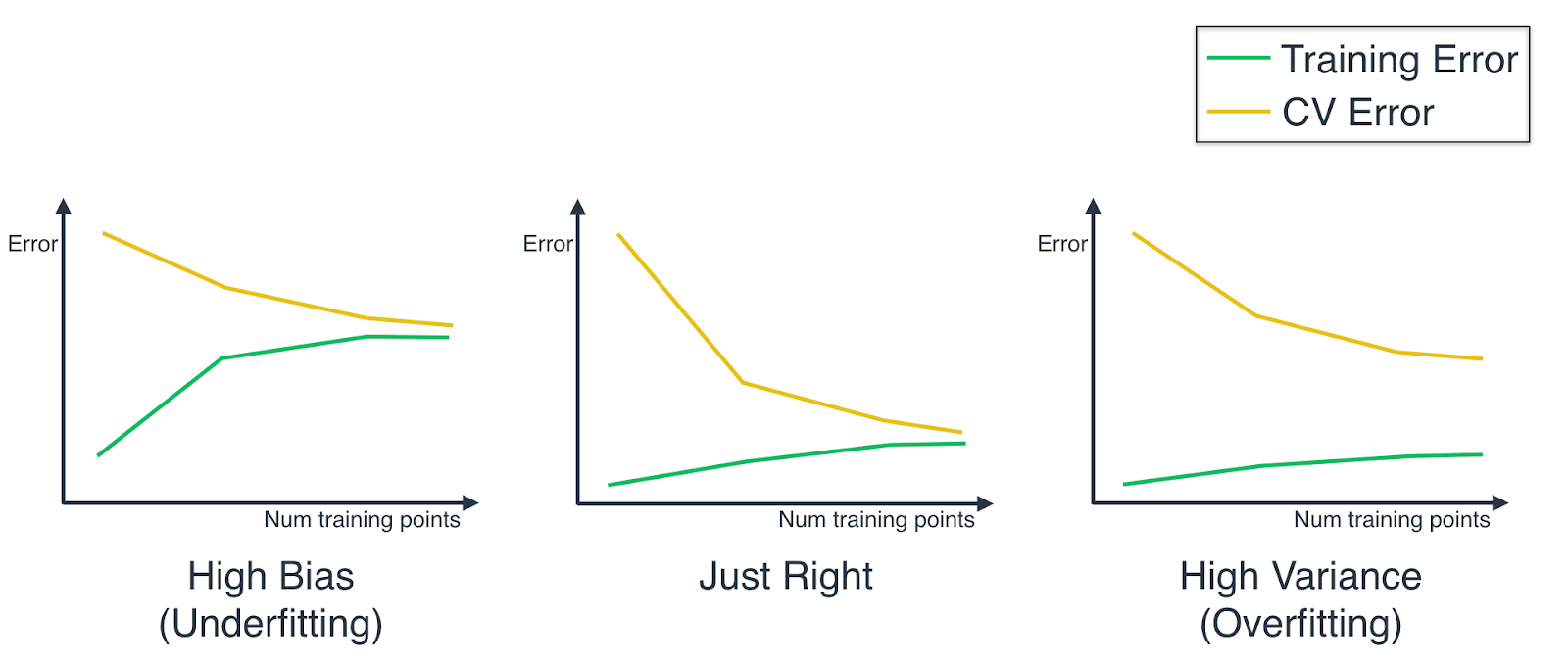

Learning Curves

Grid Search

Technique used to list down all the possibilities of the hyperparameters and pick the best one.

Model Monitoring

Population Stability Index (PSI)

- Sort scoring variable on descending order in scoring sample

- Split the data into 10 or 20 groups (deciling)

- Calculate % of records in each group based on scoring sample

- Calculate % of records in each group based on training sample

- Calculate difference between Step 3 and Step 4

- Take Natural Log of (Step3 / Step4)

- Multiply Step5 and Step6

continue model < 0.1 < slight change < 0.2 < significant change